Data integration architecture serves as the backbone for modern organizations, enabling seamless data flow across various systems. It consolidates data from multiple sources, providing a unified view that enhances decision-making and operational efficiency. Organizations benefit from improved data quality, faster insights, and enhanced collaboration. By automating data workflows, businesses reduce manual tasks and improve data security. FineDataLink exemplifies these principles by offering a low-code platform that simplifies complex data integration tasks, ensuring real-time synchronization and advanced ETL & ELT development.

Data integration architecture refers to the structured framework that facilitates the seamless flow of data across various systems within an organization. It encompasses the processes, tools, and technologies that enable the collection, transformation, and delivery of data from disparate sources to target systems. This architecture serves as a bridge, connecting different data environments to ensure interoperability and efficient data exchange.

The scope of data integration architecture extends beyond mere data movement. It involves ensuring data quality, consistency, and accessibility across the organization. By integrating data from multiple sources, organizations can create a unified view that supports comprehensive analytics and informed decision-making. This architecture plays a crucial role in eliminating data silos, thereby enhancing collaboration and operational efficiency.

The primary objectives of data integration architecture revolve around aligning data initiatives with business goals and priorities. Organizations must define clear objectives to guide their integration efforts effectively.

To achieve these objectives, organizations must start with a clear integration strategy. This strategy should outline the scope of the integration, identify key stakeholders, and define the specific data sources and target systems involved. By establishing well-defined goals, organizations can ensure that their data integration architecture aligns with their overall business strategy, leading to successful data-driven initiatives.

Data integration architecture plays a pivotal role in enhancing data accessibility within organizations. By consolidating data from various sources, it ensures that stakeholders can access the information they need when they need it. This accessibility empowers employees to make informed decisions quickly, leading to improved operational efficiency. Organizations with robust data integration architecture often experience fewer data silos, which facilitates seamless data sharing across departments.

"Organizations with data integration architecture benefit from improved data quality, governance, operational insights, and transformative outcomes compared to those without."

Effective decision-making relies heavily on accurate and timely data. Data integration architecture provides a unified view of data, enabling organizations to perform advanced analytics and derive actionable insights. This comprehensive view supports strategic planning and helps identify trends and patterns that might otherwise go unnoticed. By automating data workflows, organizations can ensure that decision-makers have access to the most current data, reducing the risk of errors and enhancing the overall quality of decisions.

In today's fast-paced business environment, agility is crucial. Data integration architecture supports business agility by building agile and resilient data pipelines. These pipelines allow organizations to adapt quickly to changing market conditions and business needs. By eliminating data silos and supporting interoperability, organizations can streamline processes and respond to new opportunities more effectively. A scalable architecture ensures that as the organization grows, its data integration capabilities can expand to meet increasing demands.

"Organizations with data integration architecture can build agile and resilient data pipelines, automate data workflows, eliminate data silos, support interoperability, perform advanced analytics, establish standardized processes, support real-time data processing, and build a scalable architecture compared to those without."

Data integration architecture comprises several essential components that work together to ensure seamless data flow and transformation across systems. Understanding these components helps organizations build a robust framework for data management and analytics.

Data sources form the foundation of any data integration architecture. They include various origins from which data is collected, such as databases, cloud services, applications, and external data feeds. Organizations often deal with diverse data formats, including structured, semi-structured, and unstructured data. Identifying and cataloging these sources is crucial for effective data integration. By understanding the nature and characteristics of each data source, organizations can tailor their integration strategies to ensure comprehensive data coverage and accessibility.
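To make cataloging concrete, here is a minimal Python sketch of an in-memory source registry that records each source's kind, owner, and data shape (structured, semi-structured, or unstructured). The names `DataSource`, `SourceCatalog`, and `DataShape`, and the example sources, are hypothetical and chosen only for illustration; a production catalog would usually live in a dedicated metadata service.

```python
from dataclasses import dataclass
from enum import Enum


class DataShape(Enum):
    STRUCTURED = "structured"            # e.g. relational tables
    SEMI_STRUCTURED = "semi-structured"  # e.g. JSON, XML, log lines
    UNSTRUCTURED = "unstructured"        # e.g. PDFs, images, free text


@dataclass(frozen=True)
class DataSource:
    name: str
    kind: str   # e.g. "database", "cloud service", "application", "external feed"
    shape: DataShape
    owner: str  # team accountable for the source


class SourceCatalog:
    """Hypothetical in-memory registry of the sources an integration must cover."""

    def __init__(self) -> None:
        self._sources: dict[str, DataSource] = {}

    def register(self, source: DataSource) -> None:
        # Reject duplicates so each source is cataloged exactly once.
        if source.name in self._sources:
            raise ValueError(f"source already registered: {source.name}")
        self._sources[source.name] = source

    def by_shape(self, shape: DataShape) -> list[DataSource]:
        return [s for s in self._sources.values() if s.shape is shape]


catalog = SourceCatalog()
catalog.register(DataSource("orders_db", "database", DataShape.STRUCTURED, "sales"))
catalog.register(DataSource("clickstream", "external feed", DataShape.SEMI_STRUCTURED, "marketing"))
catalog.register(DataSource("contracts", "cloud service", DataShape.UNSTRUCTURED, "legal"))
print([s.name for s in catalog.by_shape(DataShape.STRUCTURED)])  # ['orders_db']
```

Grouping sources by shape is one simple way to decide which ones need parsing, schema mapping, or only storage before integration.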

Transformation engines play a pivotal role in data integration architecture. These engines consist of algorithms and tools designed to move and transform data from source systems to target systems. They handle various data types and formats, ensuring that data is accurately mapped, cleansed, and enriched. Transformation engines enable organizations to convert raw data into meaningful insights by applying business rules and logic. They support data quality initiatives by identifying and rectifying inconsistencies, thus ensuring that the integrated data meets organizational standards.
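The sketch below shows, under simplifying assumptions, how a transformation engine might chain mapping, cleansing, and enrichment steps over records. The field names, the `FIELD_MAP` and `REGION_BY_COUNTRY` lookups, and the quality rules are hypothetical examples of business rules, not part of any particular product.

```python
from typing import Callable, Optional

Record = dict
Step = Callable[[Record], Optional[Record]]  # a step returns None to reject a record

# Mapping: rename source fields to the target schema (hypothetical field names).
FIELD_MAP = {"cust_nm": "customer_name", "amt": "amount", "ctry": "country"}


def map_fields(rec: Record) -> Record:
    return {FIELD_MAP.get(k, k): v for k, v in rec.items()}


# Cleansing: normalize values and reject records that break a quality rule.
def cleanse(rec: Record) -> Optional[Record]:
    name = (rec.get("customer_name") or "").strip()
    if not name:
        return None  # rule: every record needs a customer name
    try:
        amount = float(rec.get("amount"))
    except (TypeError, ValueError):
        return None  # rule: amount must be numeric
    return {**rec, "customer_name": name.title(), "amount": round(amount, 2)}


# Enrichment: derive a new field from a business lookup.
REGION_BY_COUNTRY = {"US": "Americas", "DE": "EMEA", "JP": "APAC"}


def enrich(rec: Record) -> Record:
    return {**rec, "region": REGION_BY_COUNTRY.get(rec.get("country"), "Unknown")}


def transform(records: list[Record], steps: list[Step]) -> list[Record]:
    """Run each record through the steps in order; drop it if any step rejects it."""
    out = []
    for rec in records:
        for step in steps:
            rec = step(rec)
            if rec is None:
                break
        else:  # no step rejected the record
            out.append(rec)
    return out


raw = [
    {"cust_nm": "  alice smith ", "amt": "19.999", "ctry": "US"},
    {"cust_nm": "", "amt": "5", "ctry": "DE"},       # rejected: no name
    {"cust_nm": "bob", "amt": "n/a", "ctry": "JP"},  # rejected: non-numeric amount
]
clean = transform(raw, [map_fields, cleanse, enrich])
print(clean)
```

Keeping each rule in its own small function makes it easy to reorder, test, or reuse steps across pipelines.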

Integration layers serve as the intermediary between data sources and target systems. They facilitate the seamless flow of data by providing a structured pathway for data movement. Integration layers often include middleware solutions that manage data routing, transformation, and delivery. These layers ensure that data is transmitted efficiently and securely, minimizing latency and maximizing throughput. By implementing robust integration layers, organizations can achieve interoperability between disparate systems, enabling them to harness the full potential of their data assets.

"Integration layers provide a structured pathway for data movement, ensuring efficient and secure data transmission."

Automation plays a crucial role in enhancing the efficiency and reliability of data integration architecture. By automating repetitive tasks, organizations can streamline data processes, reduce manual errors, and ensure consistent data quality. Automation not only accelerates data processing but also frees up valuable human resources for more strategic activities.

Data Integration Specialist: "Using automation, data integration architecture can simplify the process of integrating data between multiple systems. Automation allows you to define reusable rules to quickly and accurately move data between systems, reducing the time it takes to develop integrations."
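As a rough illustration of the reusable rules this quote describes, the following Python sketch defines one set of field rules for customer data and applies it unchanged to records from two different systems. `FieldRule`, `apply_rules`, and the CRM and ERP record layouts are assumptions made for this example, not a specific tool's API.

```python
from dataclasses import dataclass
from datetime import date
from typing import Any, Callable


@dataclass(frozen=True)
class FieldRule:
    source: str                    # field name in the incoming record
    target: str                    # field name in the target system
    convert: Callable[[Any], Any]  # conversion applied while moving the value


def apply_rules(record: dict, rules: list[FieldRule]) -> dict:
    """Move only the fields covered by the rules, converting each value."""
    return {rule.target: rule.convert(record[rule.source]) for rule in rules}


# One reusable rule set for customer data, shared by every integration that moves customers.
CUSTOMER_RULES = [
    FieldRule("id", "customer_id", int),
    FieldRule("email", "email", str.lower),
    FieldRule("signup", "signup_date", date.fromisoformat),
]

crm_row = {"id": "42", "email": "Ana@Example.com", "signup": "2024-03-01"}
erp_row = {"id": "7", "email": "LEE@EXAMPLE.COM", "signup": "2023-11-15", "fax": "n/a"}

print(apply_rules(crm_row, CUSTOMER_RULES))
print(apply_rules(erp_row, CUSTOMER_RULES))  # the extra "fax" field is ignored
```

Because the rules are data rather than logic duplicated in each integration, a new source with the same fields can reuse them as-is.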

Several tools and technologies support automation in data integration architecture. These tools streamline workflows, enhance data quality, and ensure seamless data flow across systems.

Automated Data Processing Specialist: "Automating data processing means using software to handle the repetitive tasks involved in transforming raw data into useful insights. This can include tasks like cleaning, transforming, analyzing, and reporting on data."

By leveraging these tools and technologies, organizations can build a robust data integration architecture that supports efficient data management and analytics. Automation not only enhances data quality and reduces operational costs but also empowers businesses to respond swiftly to market changes and internal demands.

Organizations must first understand their business needs to align data integration architecture with business goals. This understanding involves identifying key objectives and challenges that the organization faces. Data architects play a crucial role in this process. They collaborate with stakeholders to gather insights into the organization's strategic priorities. By doing so, they ensure that the data integration strategy supports these priorities effectively.

Data architects often act as project managers. They coordinate between teams and set timelines to ensure that deliverables align with business intelligence objectives. Their expertise in methodologies like Agile and DevOps allows them to adapt projects to evolving business goals. This adaptability is essential in today's fast-paced business environment, where swift deployment of projects is key.

"Architects often play the role of project managers, setting timelines, coordinating between teams, and ensuring that deliverables align with business intelligence objectives."

Designing a scalable and flexible data integration architecture is vital for long-term success. Scalability ensures that the architecture can handle increasing data volumes as the organization grows. Flexibility allows the architecture to adapt to changing business needs and technological advancements.

Data architects must consider several factors when designing for scalability and flexibility. They need to choose technologies and tools that support seamless data flow and integration. These tools should accommodate diverse data formats and sources, ensuring comprehensive data coverage. Additionally, architects must implement robust integration layers that facilitate efficient data movement and transformation.

"In agile business environments, the swift deployment of projects is key. Data integration architects, familiar with Agile and DevOps methodologies, ensure that data-driven projects are rolled out efficiently."

By focusing on scalability and flexibility, organizations can build a data integration architecture that not only meets current needs but also adapts to future challenges. This approach empowers businesses to respond swiftly to market changes and internal demands, maintaining a competitive edge.

Data integration techniques play a crucial role in managing and processing data from various sources. These techniques ensure that data is accessible, consistent, and ready for analysis. Among the most prominent methods are ETL, ELT, and Data Virtualization. Each technique offers unique advantages and is suited to different organizational needs.

ETL stands as a traditional yet foundational approach in data integration. It involves three key steps: extracting data from source systems, transforming it into a suitable format, and loading it into a target system, such as a data warehouse. This method ensures data quality and consistency by cleaning, filtering, and enriching data during the transformation phase.
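A minimal ETL run can be sketched with Python's built-in `sqlite3` module, using two in-memory databases as stand-ins for a source system and a data warehouse. The table names and the cleaning rules (keep only paid orders, trim and title-case names, convert cents to currency units) are hypothetical; the point is that transformation happens in the pipeline, before anything is loaded.

```python
import sqlite3

# In-memory databases stand in for a source system and a data warehouse.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE orders (id INTEGER, customer TEXT, amount_cents INTEGER, status TEXT)"
)
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?, ?)",
    [(1, " alice ", 1999, "paid"), (2, "bob", 500, "cancelled"), (3, "Carol", 12000, "paid")],
)

warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE fact_orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
)

# Extract: read the raw rows from the source system.
rows = source.execute("SELECT id, customer, amount_cents, status FROM orders").fetchall()

# Transform: filter, clean, and convert units in the pipeline, before loading.
transformed = [
    (order_id, customer.strip().title(), cents / 100)
    for order_id, customer, cents, status in rows
    if status == "paid"
]

# Load: write only the transformed rows into the warehouse table.
with warehouse:
    warehouse.executemany("INSERT INTO fact_orders VALUES (?, ?, ?)", transformed)

print(warehouse.execute("SELECT * FROM fact_orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 19.99), (3, 'Carol', 120.0)]
```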

Key Benefits of ETL:

ETL tools automate these processes, significantly reducing manual efforts and improving data movement efficiency. Organizations rely on ETL to integrate data seamlessly from various sources into a cohesive data warehouse.

ELT represents a more modern approach, leveraging the processing power and scalability of target systems, often in cloud environments. Unlike ETL, ELT first extracts raw data and loads it into the target system, where transformation occurs as needed. Swapping the order of the last two steps gives data analysts more flexibility and autonomy, allowing them to perform complex transformations directly within the target system.
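For comparison with the ETL process described above, a minimal ELT sketch lands raw rows in a staging table first and then transforms them with SQL inside the target. An in-memory SQLite database stands in for a cloud warehouse here, the `raw_rows` list stands in for already-extracted data, and the table names and rules are hypothetical.

```python
import sqlite3

target = sqlite3.connect(":memory:")

# Load: land the raw, untransformed records in a staging table in the target system.
target.execute(
    "CREATE TABLE raw_orders (id INTEGER, customer TEXT, amount_cents INTEGER, status TEXT)"
)
raw_rows = [(1, " alice ", 1999, "paid"), (2, "bob", 500, "cancelled"), (3, "Carol", 12000, "paid")]
with target:
    target.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", raw_rows)

# Transform: run SQL inside the target, using the target's own engine.
with target:
    target.execute(
        """
        CREATE TABLE paid_orders AS
        SELECT id AS order_id,
               TRIM(customer) AS customer,
               amount_cents / 100.0 AS amount
        FROM raw_orders
        WHERE status = 'paid'
        """
    )

print(target.execute("SELECT * FROM paid_orders ORDER BY order_id").fetchall())
# [(1, 'alice', 19.99), (3, 'Carol', 120.0)]

# The raw data is still available, so a different transformation needs no re-extraction.
print(target.execute(
    "SELECT status, COUNT(*) FROM raw_orders GROUP BY status ORDER BY status"
).fetchall())
# [('cancelled', 1), ('paid', 2)]
```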

Advantages of ELT:

ELT's ability to handle large volumes of data makes it ideal for organizations with extensive data integration needs. By utilizing the target system's capabilities, ELT supports complex transformations and advanced analytics.

Data Virtualization offers a different approach by providing a unified view of data without physically moving it. This technique allows users to access and query data from multiple sources in real time, creating a virtual layer that integrates disparate data systems.
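The following toy Python sketch illustrates the idea: each source is registered as a function that fetches rows on demand, and a query joins them at read time without copying data into a central store. `VirtualLayer`, the SQLite customer table, and the list standing in for a ticketing API are all hypothetical; real virtualization engines also push filters down to the sources and optimize joins, which this sketch does not attempt.

```python
import sqlite3
from typing import Callable, Iterable

Fetch = Callable[[], Iterable[dict]]


class VirtualLayer:
    """Toy virtualization layer: sources are registered as fetch functions and
    queried live at read time; nothing is copied into a central store."""

    def __init__(self) -> None:
        self._sources: dict[str, Fetch] = {}

    def register(self, name: str, fetch: Fetch) -> None:
        self._sources[name] = fetch

    def rows(self, name: str) -> list[dict]:
        return list(self._sources[name]())  # pulled from the source on every call

    def join(self, left: str, right: str, key: str) -> list[dict]:
        """Inner join on a shared key, computed on demand (assumes the key is unique on the right)."""
        index = {row[key]: row for row in self.rows(right)}
        return [{**row, **index[row[key]]} for row in self.rows(left) if row[key] in index]


# Source 1: a relational CRM database (in-memory SQLite as a stand-in).
crm = sqlite3.connect(":memory:")
crm.row_factory = sqlite3.Row
crm.execute("CREATE TABLE customers (customer_id INTEGER, name TEXT)")
crm.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])

# Source 2: a list standing in for a remote ticketing API response.
ticket_api = [{"customer_id": 1, "open_tickets": 3}]

layer = VirtualLayer()
layer.register(
    "customers",
    lambda: (dict(r) for r in crm.execute("SELECT * FROM customers ORDER BY customer_id")),
)
layer.register("tickets", lambda: ticket_api)

print(layer.join("customers", "tickets", "customer_id"))
ticket_api.append({"customer_id": 2, "open_tickets": 1})  # the source changes...
print(layer.join("customers", "tickets", "customer_id"))  # ...and the next query sees it
```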

Benefits of Data Virtualization:

Data Virtualization empowers organizations to perform advanced analytics without the overhead of traditional data integration methods. It supports interoperability and enhances data accessibility, making it a valuable tool for dynamic business environments.

Data integration architecture employs various patterns to manage and streamline data flow across systems. These patterns provide frameworks that ensure efficient data processing and accessibility. Understanding these patterns helps organizations choose the right approach for their data integration needs.

The Hub-and-Spoke model stands as a prominent architectural pattern in data integration. This model centralizes data management by using a central hub to process and route data from multiple sources, known as "spokes." The hub acts as the nerve center, ensuring that all data flows through a single point before distribution to various systems.
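A minimal Python sketch of the pattern, assuming an in-process hub: source spokes publish messages to the hub, which normalizes each message once, records it, and routes it to every target spoke subscribed to that topic. The `Hub` class, the topic name, and the store identifiers are hypothetical.

```python
from typing import Callable

Message = dict
Handler = Callable[[Message], None]


class Hub:
    """Central hub: every message from a source spoke passes through here,
    is normalized once, logged, and routed to the target spokes for its topic."""

    def __init__(self, normalize: Callable[[Message], Message]) -> None:
        self._normalize = normalize
        self._routes: dict[str, list[Handler]] = {}
        self.audit_log: list[tuple[str, Message]] = []

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._routes.setdefault(topic, []).append(handler)

    def publish(self, source: str, topic: str, message: Message) -> int:
        msg = self._normalize(message)
        self.audit_log.append((source, msg))  # one place to see every data flow
        handlers = self._routes.get(topic, [])
        for handler in handlers:
            handler(msg)
        return len(handlers)  # number of target spokes reached


# Hypothetical spokes: regional stores publish sales; a warehouse and a dashboard consume them.
warehouse_rows: list[Message] = []
dashboard_totals: list[float] = []

hub = Hub(normalize=lambda m: {**m, "region": m["region"].upper()})
hub.subscribe("sales", warehouse_rows.append)
hub.subscribe("sales", lambda m: dashboard_totals.append(m["amount"]))

hub.publish("store-eu", "sales", {"region": "eu", "amount": 120.0})
hub.publish("store-us", "sales", {"region": "us", "amount": 80.0})
print(len(warehouse_rows), sum(dashboard_totals))  # 2 200.0
```

Centralizing normalization and logging in the hub is what makes the pattern easy to govern; the trade-off is that the hub becomes a single point of failure and a potential bottleneck.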

"The Hub-and-Spoke architecture centralizes data integration, acting as the nerve center for data flows."

Data Lake Architecture offers a flexible approach to data integration. Unlike traditional models, it stores raw data in its native format, allowing for diverse data types and structures. This architecture supports advanced analytics and real-time data processing.
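The sketch below, assuming a local directory as the lake's storage layer, shows two habits that distinguish a data lake: files are written exactly as received (here partitioned by source and ingestion date), and a schema is applied only when the data is read. The directory layout and function names are hypothetical; object storage such as a cloud bucket would typically replace the local filesystem.

```python
import json
import tempfile
from datetime import date
from pathlib import Path


def land_raw(lake: Path, source: str, day: date, filename: str, payload: bytes) -> Path:
    """Store bytes exactly as received, partitioned by source and ingestion date."""
    folder = lake / "raw" / source / f"dt={day.isoformat()}"
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / filename
    path.write_bytes(payload)  # no parsing and no schema enforced on write
    return path


def read_json_lines(lake: Path, source: str) -> list[dict]:
    """Schema-on-read: JSON lines are parsed only when a consumer asks for them."""
    records = []
    for path in sorted((lake / "raw" / source).glob("dt=*/*.jsonl")):
        for line in path.read_text(encoding="utf-8").splitlines():
            if line.strip():
                records.append(json.loads(line))
    return records


with tempfile.TemporaryDirectory() as tmp:
    lake = Path(tmp)
    clicks = b'{"user": 1, "page": "/home"}\n{"user": 2, "page": "/cart"}\n'
    land_raw(lake, "clickstream", date(2025, 1, 1), "part-0.jsonl", clicks)
    # Unstructured data is kept in its native format too (placeholder bytes here).
    land_raw(lake, "contracts", date(2025, 1, 1), "contract-001.pdf", b"%PDF-1.7 placeholder")
    events = read_json_lines(lake, "clickstream")

print(events)
```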

Data Lake Architecture empowers organizations to harness the full potential of their data assets, facilitating innovative data-driven solutions.

Real-world examples illustrate the effectiveness of these architectural patterns in data integration. Many organizations adopt the Hub-and-Spoke model to streamline data management and enhance operational efficiency. For instance, a global retail company might use this model to integrate sales data from various regional stores into a central system for analysis and reporting.

Similarly, tech companies often leverage Data Lake Architecture to support big data initiatives. By storing vast amounts of raw data, they can perform complex analyses and derive insights that drive innovation and growth.

These examples demonstrate how architectural patterns in data integration can transform data management, enabling organizations to achieve their strategic objectives.

FanRuan Software Co., Ltd. stands as a leader in data analytics and business intelligence solutions. Originating from China, the company has carved a niche in the global market by focusing on innovation and customer-centric solutions. FanRuan empowers businesses with data-driven insights, facilitating informed decision-making and strategic growth. With over 30,000 clients and more than 92,000 projects implemented worldwide, FanRuan has established itself as a prominent player in the business intelligence sector.

The company's commitment to excellence is evident in its extensive workforce of over 1,800 employees and a user base exceeding 2.5 million. FanRuan's solutions are designed to address the challenges of data integration, data quality, and data analytics. By leveraging cutting-edge technology, FanRuan ensures that organizations can harness the full potential of their data assets.

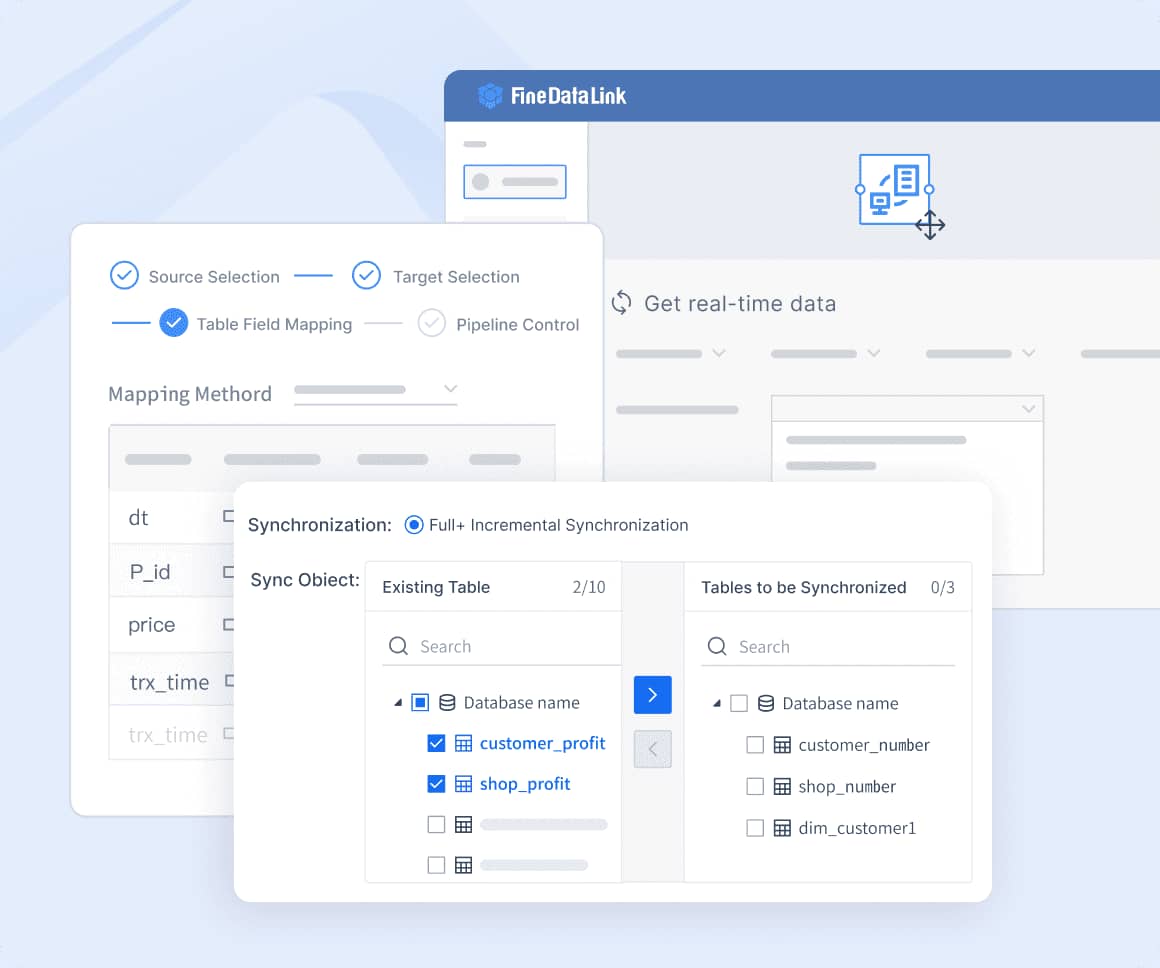

FineDataLink plays a pivotal role in FanRuan's data integration architecture. This enterprise-level platform simplifies complex data integration tasks, making it an ideal choice for businesses seeking efficient solutions. FineDataLink's low-code approach allows users to perform real-time data synchronization and advanced ETL & ELT development with ease. The platform's drag-and-drop functionality and detailed documentation enhance user experience, ensuring seamless data integration processes.

FineDataLink supports over 100 common data sources, enabling organizations to integrate and synchronize data across various systems effortlessly. Its real-time data synchronization keeps latency low, typically in the millisecond range, which makes it suitable for database migration and backup. The platform also facilitates the construction of both offline and real-time data warehouses, supporting comprehensive data management and governance.
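Real-time synchronization of this kind is commonly built on change data capture (CDC): the source emits an ordered log of inserts, updates, and deletes, and the target applies them in order. The Python sketch below illustrates that generic technique with an in-memory SQLite replica. It is not FineDataLink's API or implementation, and `ChangeEvent`, `Replica`, and the log positions (`lsn`) are hypothetical.

```python
import sqlite3
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    lsn: int                    # position in the source change log (ordering and resume point)
    op: str                     # "insert", "update", or "delete"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # new row values; None for deletes


class Replica:
    """Target table kept in sync by applying change events in log order."""

    def __init__(self) -> None:
        self.db = sqlite3.connect(":memory:")
        self.db.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance REAL)")
        self.last_lsn = 0  # highest log position applied so far

    def apply(self, events: list[ChangeEvent]) -> None:
        applied = self.last_lsn
        with self.db:  # apply the whole batch in one transaction
            for ev in sorted(events, key=lambda e: e.lsn):
                if ev.lsn <= applied:
                    continue  # already applied: makes redelivery harmless
                if ev.op in ("insert", "update"):
                    self.db.execute(
                        "INSERT OR REPLACE INTO accounts (id, balance) VALUES (?, ?)",
                        (ev.key, ev.row["balance"]),
                    )
                elif ev.op == "delete":
                    self.db.execute("DELETE FROM accounts WHERE id = ?", (ev.key,))
                else:
                    raise ValueError(f"unknown operation: {ev.op}")
                applied = ev.lsn
        self.last_lsn = applied  # only advanced after the transaction commits


replica = Replica()
log = [
    ChangeEvent(1, "insert", 10, {"balance": 100.0}),
    ChangeEvent(2, "insert", 11, {"balance": 50.0}),
    ChangeEvent(3, "update", 10, {"balance": 75.0}),
    ChangeEvent(4, "delete", 11),
]
replica.apply(log[:2])
replica.apply(log[1:])  # event 2 is redelivered and skipped
print(replica.db.execute("SELECT id, balance FROM accounts").fetchall(), replica.last_lsn)
# [(10, 75.0)] 4
```

Tracking the last applied log position is what lets a sync job resume after a restart without reapplying or skipping changes; real CDC tools read the database's transaction log rather than receiving a Python list.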

Company Information:

- FineDataLink has enabled the successful delivery of 1,000 data projects.

- It fully integrates with existing information systems, enhancing operational efficiency.

FineDataLink's robust features, such as API integration and real-time data pipelines, empower organizations to build agile and resilient data architectures. By automating data workflows, FineDataLink reduces manual intervention, allowing businesses to focus on strategic initiatives. This approach not only enhances productivity but also ensures that organizations can respond swiftly to changing market conditions.

Data integration architecture forms the backbone of modern data management, ensuring seamless data flow and accessibility. To build a robust architecture, organizations should align it with clear business objectives, design it for scalability and flexibility, and automate repetitive data workflows.

Continuous evaluation and adaptation remain crucial. Regularly reviewing the architecture allows organizations to incorporate new insights and technologies, fostering innovation and maintaining a competitive edge.


The Author

Howard

Data Management Engineer & Data Research Expert at FanRuan
